LiteLLM

Run 100+ LLMs behind one OpenAI-compatible endpoint with logging, auth, and cost controls.

Overview

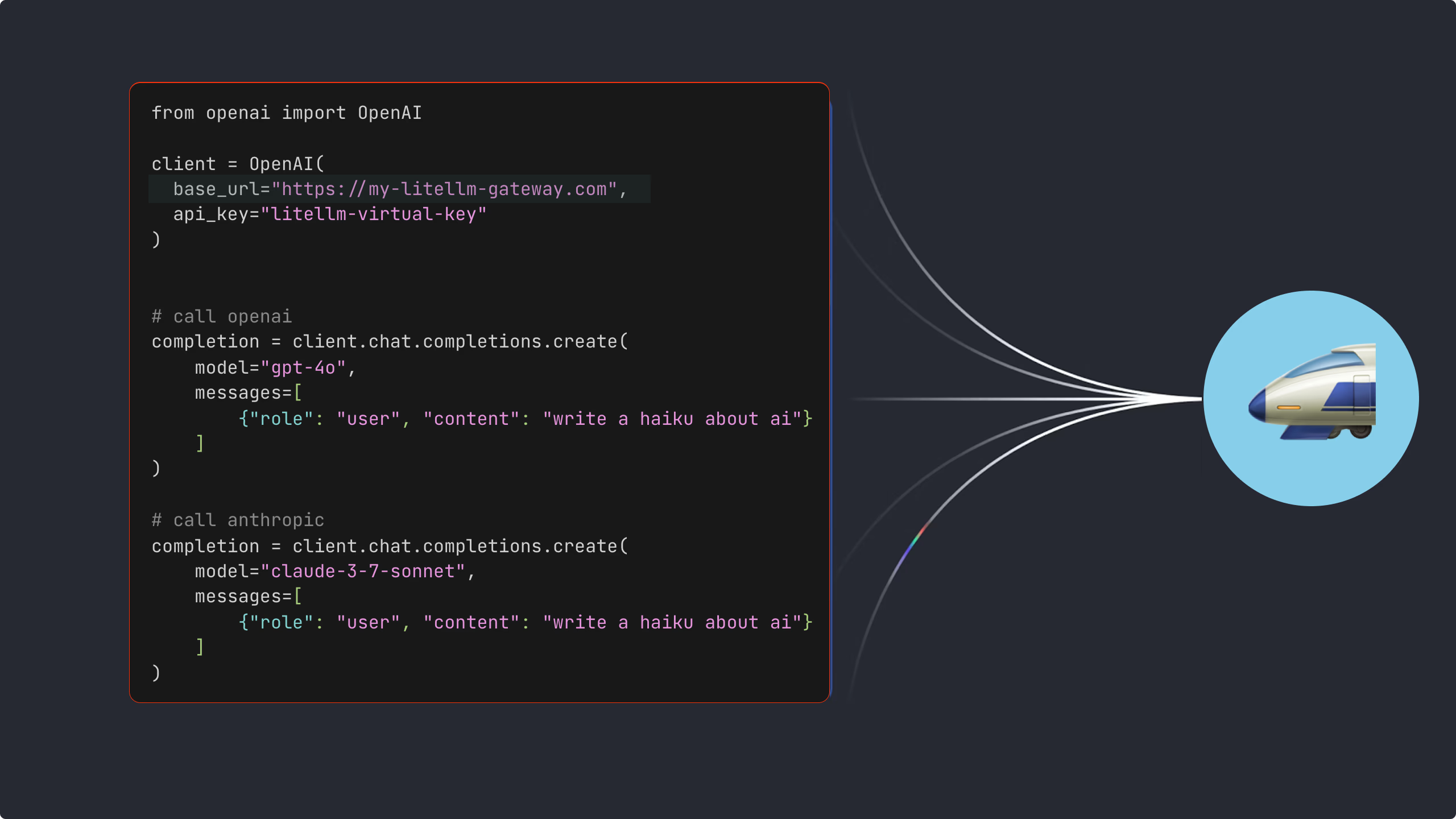

LiteLLM is an open-source gateway that lets you call 100+ LLM APIs using the OpenAI API format. It simplifies authentication, load-balances requests across deployments, and tracks usage and costs across providers. It is designed for developers who want a single, consistent way to integrate many LLMs into their applications.
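The following minimal sketch shows what "the OpenAI API format" looks like in practice. It assumes a LiteLLM proxy is reachable at `http://localhost:4000` and that a model named `gpt-4o` has been configured on it. Both are assumptions, so substitute your own URL, key, and model name. The code uses only the Python standard library. It builds a standard chat completion request against the proxy's `/v1/chat/completions` route and sends the proxy key as a bearer token.

```python
import json
import urllib.request

# Assumption: the LiteLLM proxy listens here; adjust for your deployment.
BASE_URL = "http://localhost:4000"


def build_chat_request(base_url, api_key, model, messages):
    """Build (but do not send) an OpenAI-format chat completion request."""
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The proxy key travels in the standard OpenAI bearer header.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(req):
    """Send the request and return the first choice's text (needs a live proxy)."""
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

For example, `send_chat_request(build_chat_request(BASE_URL, "sk-your-litellm-key", "gpt-4o", [{"role": "user", "content": "Hello!"}]))` would return the model's reply. The key shown is a hypothetical placeholder. The request shape stays the same whichever provider backs the model, so switching providers means changing the `model` string rather than the client code.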

Features

- Supports 100+ LLM APIs, including OpenAI, Anthropic, Cohere, Hugging Face, and more.

- Unified OpenAI API format across all backends.

- Built-in authentication and per-key rate limiting.

- Tracks spend and usage across providers.

- Supports load balancing and failover.

- Lightweight and easy to deploy via Docker.
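The following conceptual Python sketch illustrates the idea behind load balancing and failover. It is not LiteLLM's router and does not use its API. The `RoundRobinRouter` class, the deployment names, and the `send` callback are all hypothetical. Each request starts at the next deployment in round-robin order. If that deployment raises an error, the request falls through to the remaining deployments before giving up.

```python
import itertools
from typing import Callable, Sequence


class AllDeploymentsFailed(Exception):
    """Raised when every deployment in the pool fails for a single request."""


class RoundRobinRouter:
    """Hypothetical sketch: spread requests across deployments, fail over on error.

    Load balancing: each call starts at the next deployment in rotation.
    Failover: on an exception, the remaining deployments are tried in order.
    """

    def __init__(self, deployments: Sequence[str]):
        if not deployments:
            raise ValueError("need at least one deployment")
        self._deployments = list(deployments)
        # Infinite rotation of starting indices: 0, 1, ..., n-1, 0, 1, ...
        self._start = itertools.cycle(range(len(self._deployments)))

    def call(self, send: Callable[[str], str]) -> str:
        first = next(self._start)
        n = len(self._deployments)
        errors = []
        for offset in range(n):
            deployment = self._deployments[(first + offset) % n]
            try:
                return send(deployment)
            except Exception as exc:  # any error means "try the next one"
                errors.append((deployment, exc))
        raise AllDeploymentsFailed(errors)
```

For example, `RoundRobinRouter(["azure-east", "openai", "azure-west"]).call(send)` would rotate traffic across three deployments. Here `send` is whatever function actually issues the request to one deployment. A production router would also weigh latency, rate limits, and cooldowns, which this sketch deliberately leaves out.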

Pricing

7-day free trial!

Host With Us

- Flat monthly pricing per app

- No configuration know-how needed

- Access and manage apps privately from any browser

- Try apps hassle-free and keep the ones you love!

- Apps run on a private VPS

- Automated, end-to-end encrypted backup and recovery service included

- Clovyr technical support

Bring Your Own Host

- Free

- No configuration know-how needed

- Access and manage apps privately from any browser

- Try apps hassle-free and keep the ones you love!

- Apps run in the cloud account you already have

- Automated, end-to-end encrypted backup and recovery service not included

- Clovyr technical support not included

- No Clovyr account required

Add Clovyr secure backup and recovery service for $5/app